Research Design & Questions

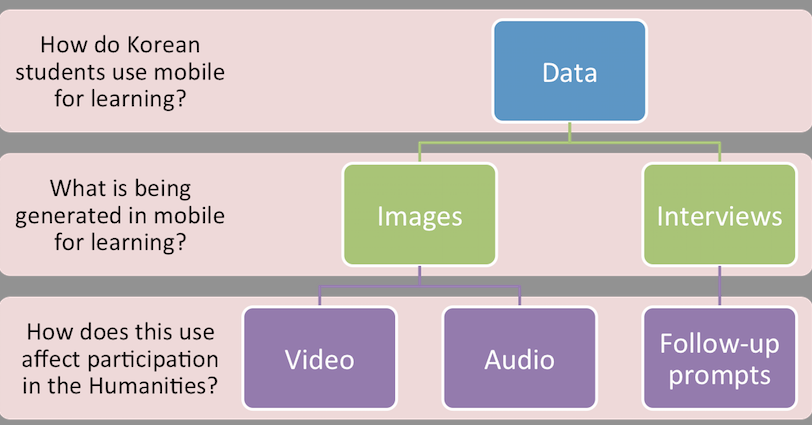

As briefly as I can muster, my research study is focused on how (generally) Korean graduate students in the Humanities use mobile technology to support their learning, informally or formally. My research questions towards that end are as follows:

- How do graduate students in higher education in the humanities in South Korea use mobile technology to support their learning practices?

- What mobile artifacts (compositions of text or multimedia designed to make meaning for graduate students in their disciplines) are being produced in mobile technology in Korean higher education in the humanities?

- How does that use of mobile technology affect their participation in the Humanities?

There are several in-between layers here justifying this research design, but to answer these questions I eventually settled on three distinct data collection activities lumped into two phases.

Phase 1

- Interviews: questions, answers, transcripts; the vanilla flavor of all research design.

- Media artifacts generated through mobile: these are images, video, and audio generated by these students either to augment their learning (or in some cases simply to be the knowledge product of that learning) or to represent how they learn. There is a distinction between those two that I won’t bore you with. In this activity, students submitted an undefined (by me) amount of media generated through their mobile technology.

Phase 2

- Prompts: after a cursory analysis of the data (with a sharp eye out for emerging themes), I generate a series of questions, delivered one by one via KakaoTalk (an absolutely dominant force in the Korean messaging application market), to test my analysis and to have participants expand on their practices and intent in generating this data.

Media Analysis

Analysis of the interview transcripts is relatively straightforward (using a narrative analysis approach), as are the prompts in Phase 2. The media is being transcribed and analyzed with a few different strands of multimodality. I won’t bore you with too much detail here, but here are some references that are forming at least part of the transcription.

- Kress, G. and Van Leeuwen, T. (2006), Reading Images: The Grammar of Visual Design (Second Edition). London: Routledge.

- Burn, A. and Parker, D. (2003a), Analysing Media Texts. London: Continuum.

- Burn, A. and Parker, D. (2001), Making your mark: Digital inscription, animation, and a new visual semiotic. Education, Communication & Information, 1 (2), 155-179.

- Newfield, D. (2009), “Transduction, transformation and the transmodal moment”. In Jewitt, C. (ed.), The Routledge Handbook of Multimodal Analysis. London: Routledge.

So I have approaches for imagery (Kress & Van Leeuwen), approaches for video (Burn & Parker, primarily), and even a concluding approach (Newfield) that discusses the participants’ seemingly endless capacity for transduction, moving from mode to mode rather effortlessly. All except audio.

Now the problem with these audio pieces is that they aren’t speech acts. They are ambient audio pieces, submitted by (surprisingly) many of the participants, that document any number of things related to their learning spaces (several participants submitted a brief explanation, in text, of what the sounds were). Some are sounds of motion (buses, trains, horns, etc.), some are of stillness (a lapping lake in a park, birds), some are of their home spaces (keyboard clicks, analog clocks, shuffling of feet), etc. Even though they aren’t speech acts, they are compositions. They are selected, composed, and presented as the creator intended them to be. They aren’t accidents (judging by the textual descriptions that many of the participants provided). And so I am at a bit of a loss for generating an analytical approach to them, aside from taking at face value what the participants described them as (and some, but not all, provided descriptions).

I want to use this audio to triangulate the findings from the text, the images, and the video, as the audio is more stark, more immediate, with a bit more of an emotional fabric to it. Since I am concerned with participation in the Humanities and how mobile affects that, I think this audio could provide the emotional content to complement the intellectual content emerging from the other data. But as far as I can tell, there isn’t much by way of analytical techniques for ambient audio. The work of the London Sound Survey is an excellent guide, especially as a comprehensive field guide to ambient audio in and around the city across a few different axes. What is needed now, I think, is a means to link that audio data to the video and imagery.

By way of illustration, there is a difference between these two artifacts, image and audio, in their impact, their meaning, and their composition. Yet they are perfectly complementary.