James Lamb, Jeremy Knox, and I are presenting at BreMM17 in Bremen in September on our mobile learning idea of an unrehearsed group walk, which we have explored in several locations with varying levels of ‘instructional’ control: in Edinburgh, in London, and now in Bremen. Pekka and I have run similar activities in Helsinki and Seoul. All of these explore mobile learning in the field, in the open, and in response to the lived world of the participants. Generally they are unscripted affairs in which predilection and research interest guide navigation and exploration. We let a general disposition (evoking Bourdieu’s habitus) guide our journey and keep reflective practice in the foreground as often as we can. These are multimodal explorations, so we attempt to capture and critique as many streams of data as possible and reflect on the limitations and capacities the technology presents along the way.

For Bremen, we are exploring a slightly different take, one in which prompts are delivered at intervals to dispersed groups of participants. These prompts align with the conference’s theme of multimodality: they explore particular modes, passages from seminal works, or even how multimodality informs the composites of urban space and regional identity. The prompts are scripted and timed (10-15 minutes each), but participants are free to explore in any direction or inclination they wish to pursue. The tension in this model lies in its automated and/or instructional elements, a tension we want to explore in the field. It builds on the work of colleagues at the Centre for Research in Digital Education (including Jeremy) with Teacherbot.

In essence, we want to explore particular themes and questions (delivered as prompts) with a dispersed aggregation of participants (individuals or groups that can come together and break apart as they see fit) at routine intervals (10-15 minutes), with GPS coordinates delivered at the end so everyone can reassemble for a debrief. As all of us will be participants (and, to a lesser degree, an instructional presence in the group), we are exploring how to incorporate automated elements into this process, as well as spaces where we can have a productive and secure exchange.
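The interval-based delivery described above can be sketched as a simple schedule. This is a minimal illustration, not the setup we will actually use: it assumes a fixed interval inside the 10-15 minute window, a hypothetical `build_schedule` helper, placeholder prompts, and placeholder meeting-point coordinates.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class ScheduledMessage:
    send_at: datetime
    text: str


def build_schedule(
    prompts: List[str],
    start: datetime,
    interval_minutes: int = 12,
    meeting_point: Tuple[float, float] = (53.0, 8.8),  # hypothetical placeholder, not a real venue
) -> List[ScheduledMessage]:
    """Space prompts at a fixed interval, then append a reassembly message."""
    if not 10 <= interval_minutes <= 15:
        raise ValueError("interval_minutes should fall within the 10-15 minute window")
    step = timedelta(minutes=interval_minutes)
    # Prompt i goes out i intervals after the start of the walk.
    schedule = [ScheduledMessage(start + i * step, p) for i, p in enumerate(prompts)]
    # One interval after the last prompt, send the coordinates for the debrief.
    lat, lon = meeting_point
    schedule.append(
        ScheduledMessage(start + len(prompts) * step, f"Reassemble for the debrief at {lat}, {lon}")
    )
    return schedule


if __name__ == "__main__":
    # Placeholder prompts and a hypothetical start time.
    prompts = [
        "Record a sound that belongs only to this street.",
        "Photograph a sign that mixes two languages.",
        "Add a song to the playlist that fits where you are now.",
    ]
    for msg in build_schedule(prompts, datetime(2017, 9, 1, 10, 0)):
        print(msg.send_at.strftime("%H:%M"), msg.text)
```

A fixed interval keeps the rhythm predictable for dispersed groups; varying it within the window would be an equally reasonable choice.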

One possibility I explored was creating a mobile application from scratch via TextIt, a nifty SMS application tool that works on virtually any mobile device. This proved cumbersome when it came to providing a collaborative space around fairly diverse media types. I needed the activity to take place within the group (secure) and for the group to work with each other (collaborative) across audio, images, video, and text. TextIt is well suited to broad 1:1 communication (and yes, it can be set up for group activity as well), but for a one-off configuration for a conference, it seemed unwieldy.

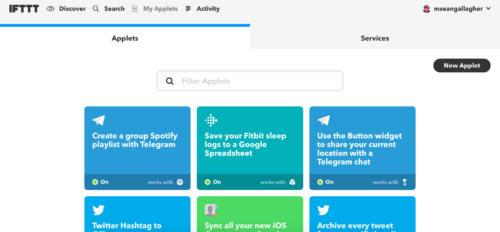

Then I explored daisy-chaining a series of triggers via IFTTT and Zapier. This is basic ‘if this, then that’ (IFTTT) logic: if App A posts a photo, update App B, and so forth. The logic can get fairly sophisticated, particularly in Zapier. I created or borrowed several of these triggers for Bremen, though I had already been using most of them (in particular, to save tweets or hashtags to Google Sheets for research).
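The daisy-chaining idea can be illustrated with a toy rule engine in which each ‘applet’ pairs a trigger (‘if this’) with an action (‘then that’), and an action may emit a follow-on event that fires the next applet. This is a hypothetical sketch of the logic only: it does not call IFTTT or Zapier, and the event names and the `run_chain` helper are invented for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, List

Event = dict  # e.g. {"source": "app_a", "type": "photo_posted", ...}


@dataclass
class Applet:
    name: str
    trigger: Callable[[Event], bool]        # "if this"
    action: Callable[[Event], List[Event]]  # "then that"; may emit follow-on events


def run_chain(applets: List[Applet], first_event: Event, max_steps: int = 20) -> List[str]:
    """Process events breadth-first, returning the names of applets that fired."""
    fired: List[str] = []
    queue = deque([first_event])
    steps = 0
    while queue and steps < max_steps:
        event = queue.popleft()
        steps += 1
        for applet in applets:
            if applet.trigger(event):
                fired.append(applet.name)
                # Events emitted by the action are queued, which is what chains applets together.
                queue.extend(applet.action(event))
    return fired


# Hypothetical chain: a photo posted in App A updates App B, which then logs a row to a sheet.
applets = [
    Applet(
        "photo_to_app_b",
        lambda e: e["type"] == "photo_posted" and e["source"] == "app_a",
        lambda e: [{"type": "album_updated", "source": "app_b", "url": e["url"]}],
    ),
    Applet(
        "app_b_to_sheet",
        lambda e: e["type"] == "album_updated",
        lambda e: [],  # terminal action: appending a spreadsheet row would happen here
    ),
]

if __name__ == "__main__":
    print(run_chain(applets, {"type": "photo_posted", "source": "app_a", "url": "photo-1.jpg"}))
    # prints ['photo_to_app_b', 'app_b_to_sheet']
```

The `max_steps` cap guards against chains that loop back on themselves.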

The first and third triggers in the screenshot will be used in Bremen. The second is there because I am a poor sleeper, and I was too lazy to remove it for the screenshot. With these, I can share my location to reassemble participants when needed during the excursion, and I can use the Spotify trigger to ask participants to co-create a playlist in response to particular prompts. Never doubt the power of music to shape how we interpret space. It is also light-touch and playful, which helps combat fatigue.

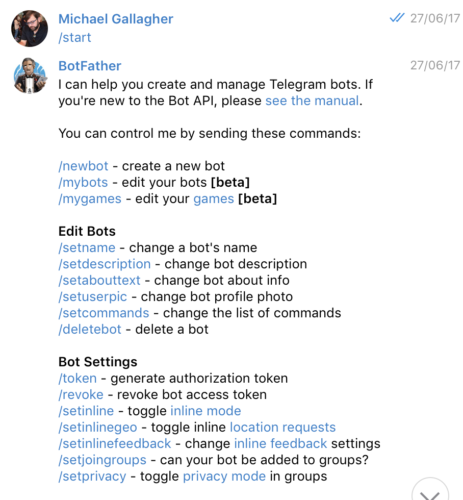

Next I needed a space in which to conduct the activity. I balanced the needs of the activity itself, the ethical issues surrounding this type of activity (we are exposing participants to a sophisticated and ubiquitous surveillance apparatus), and the need for a controlled, centralised space in which to interact around the data generated. There are ways to do this without a centralised space, but I defaulted to an arrangement in which participants could feel comfortable knowing their activity was fairly secure. For now, I am leaning towards Telegram, as I use it for many other projects with NGOs that require some degree of security. It is still a risk, but one that will be communicated to participants. Having to install an app just for the activity is a pain, but again, this is a calculated risk.

The goal, then, was to bring these triggers into a centralised space. Cue the chatbots.

- In Telegram, I created a Group for the activity.

- In IFTTT, I linked the trigger to the Telegram Group.

- In Telegram, I invited the bot to the Group and started it.
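IFTTT handles the messaging for us here, but it may help to see roughly what posting into the Group involves. The sketch below builds requests for the Telegram Bot API’s `sendMessage` and `sendLocation` methods, for example to push a prompt or the reassembly point. It assumes a bot token issued by @BotFather and the Group’s chat ID; both values in the example are placeholders, and `prompt_request`, `location_request` and `send` are hypothetical helpers rather than part of any library.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_BASE = "https://api.telegram.org"


def build_request(token: str, method: str, params: dict) -> Request:
    """Build a form-encoded POST to https://api.telegram.org/bot<token>/<method>."""
    url = f"{API_BASE}/bot{token}/{method}"
    data = urlencode(params).encode("utf-8")
    return Request(url, data=data, method="POST")


def prompt_request(token: str, chat_id: str, text: str) -> Request:
    # sendMessage posts a text message (here, a walk prompt) to the chat.
    return build_request(token, "sendMessage", {"chat_id": chat_id, "text": text})


def location_request(token: str, chat_id: str, latitude: float, longitude: float) -> Request:
    # sendLocation posts a map pin, e.g. the point where everyone reassembles.
    return build_request(
        token, "sendLocation", {"chat_id": chat_id, "latitude": latitude, "longitude": longitude}
    )


def send(request: Request) -> dict:
    """Perform the request and return Telegram's decoded JSON reply."""
    with urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    TOKEN = "123456:PLACEHOLDER"  # hypothetical; a real token comes from @BotFather
    CHAT_ID = "-1001234567890"    # hypothetical group chat ID
    req = prompt_request(TOKEN, CHAT_ID, "Find a sound that surprises you.")
    print(req.full_url, req.data)
    # send(req)  # only with a real token: this would actually post to the Group
```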

Now the IFTTT triggers I associate with this Telegram Group are available to everyone in it. For example, I have a trigger that lets participants add a song to a Spotify playlist in response to particular prompts or activity. I then share the Spotify playlist with everyone as an artifact from and of the event. There are limitations on exporting all the data from the Group (fewer for a Telegram Channel), but for now this is good enough for Bremen, and I will field-test it ahead of the event. This is about as basic a chatbot configuration as possible, and there is much, much more to do, as the beginnings of a very long command list from Chatfuel suggest.
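As one illustration of how the playlist trigger might work without IFTTT in the middle, the sketch below parses a hypothetical `/song <track URI>` command posted in the Group and builds a request to the Spotify Web API endpoint that adds items to a playlist (`POST /v1/playlists/{playlist_id}/tracks`). The command format, the helper names, and the placeholder token and playlist ID are all assumptions; obtaining an OAuth access token with a playlist-modify scope is not shown.

```python
import json
from typing import Optional
from urllib.request import Request

SPOTIFY_API = "https://api.spotify.com/v1"


def parse_song_command(message: str) -> Optional[str]:
    """Return the track URI from a '/song spotify:track:<id>' message, else None."""
    parts = message.strip().split(maxsplit=1)
    if len(parts) != 2 or parts[0] != "/song":
        return None
    uri = parts[1].strip()
    # Only accept Spotify track URIs; ignore anything else participants type.
    return uri if uri.startswith("spotify:track:") else None


def add_track_request(access_token: str, playlist_id: str, track_uri: str) -> Request:
    """Build the POST that appends one track to the shared playlist."""
    url = f"{SPOTIFY_API}/playlists/{playlist_id}/tracks"
    body = json.dumps({"uris": [track_uri]}).encode("utf-8")
    return Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {access_token}", "Content-Type": "application/json"},
    )


if __name__ == "__main__":
    uri = parse_song_command("/song spotify:track:PLACEHOLDER")  # hypothetical track ID
    if uri is not None:
        req = add_track_request("ACCESS_TOKEN", "PLAYLIST_ID", uri)  # placeholders
        print(req.full_url, req.data)
```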

There is much to explore here without getting overly technical: automation vs. autonomy, intervals vs. open-ended exploration, structured prompts vs. serendipity, and the role of instruction vs. individual exploration. I will likely bring some of this chatbot activity into my work in ICT4D, where a growing number of use cases are emerging. The original submission for the Bremen conference is below. As I said, we are not yet sure we will go in this direction, but we wanted to share what we have found so far.

Multimodality and Mobile Learning in Bremen

In this paper-as-performance we will undertake an unrehearsed group walk – a ‘multimodal dérive’ – during BreMM17. Through this exercise we intend to demonstrate that bringing together multimodality and mobile learning provides valuable opportunities for investigating our relationship with the urban, which in turn provokes questions about the social, cultural and political issues of our time. Instead of a conventional paper, our contribution will take place in the street, attending to the full range of semiotic material from which impromptu sites for learning emerge (Sharples et al., 2009).

Our excursion will also make the case for a nuanced understanding of ‘the digital’ within multimodal research. By using our mobile devices to navigate and record a path through the city, we will challenge the binary that conceptualises technology either as a driver of change or as a tool for conveying meaning. Instead, our experience will reiterate the co-constituting nature of humans and technology, which in turn raises questions about how we gather and understand multimodal data in an increasingly digital world.

While Kress and Pachler (2007) have recognised the compatibility of multimodality and mobile learning, little work has sought to exploit their conceptual and methodological common ground. By bringing multimodality in step with mobile learning, we will draw attention to the way that our understanding of the city exists at the intersection of an assemblage of human and non-human actors. This includes our personal interests and histories, the opportunities and limitations presented via code and algorithm within our technological devices, and a wider sphere of resources that shape our experiences at a moment in time: weather, hunger, traffic, time. Using conceptual work by De Souza e Silva & Frith (2013), we see urban space not as a static container of meaning waiting to be analysed, but as relational to an assemblage of agencies that go beyond what can be seen, heard and touched.

Bibliography

- De Souza e Silva, A., & Frith, J. (2013). Re-narrating the city through the presentation of location. In The Mobile Story: Narrative Practices with Locative Technologies. London and New York: Routledge.

- Kress, G., & Pachler, N. (Eds.) (2007). ‘Mobile Learning: Towards a Research Agenda’. WLE Centre, Occasional Papers in Work-based Learning 1. Available at: http://eprints.ioe.ac.uk/5402/1/mobilelearning_pachler_2007.pdf

- Sharples, M., Arnedillo-Sánchez, I., Milrad, M., & Vavoula, G. (2009). Mobile learning: Small devices, big issues. In N. Balacheff, S. Ludvigsen, T. De Jong, A. Lazonder, S. Barnes, & L. Montandon (Eds.), Technology-Enhanced Learning (pp. 233-249). Berlin, Germany: Springer.
