- Annotation, mLearning, and Geocaching Part #1: Scenarios and Technical Requirements

- Annotation, mLearning, and Geocaching Part #2: The Use Case

- Annotation, mLearning, and Geocaching Part #3: Annotating Maps

- Annotation, mLearning, and Geocaching Part #4: Annotating Text and Beyond

- Annotation, mLearning, and Geocaching Part #5: Geocaching and Enacting the Use Case

At this point in the series we have identified our requirements and conducted a general survey of existing tools. Though we have not found a candidate tool that satisfies all of our requirements, there is still hope in the form of the Open Annotation Data Model. The model’s extensible, interoperable framework allows for the generation of rich associations between resources (beyond simple text-on-text annotation) and the ability to share these associations across platforms, which will be critical for collaboration across, or even within, institutions.

But despite the flexibility of the model, and a few custom implementations of rich annotation demonstrated by Genius and Hypothesis, there are significant gaps between our use case and the realities of the software on offer today. Furthermore, most of the text annotation tools today suffer when accessed via a mobile device. So, how might we find a path forward?

One approach we might consider is to separate all of our activities, supporting each with whatever tools are available. Let’s break it down.

- Ability to georeference a historical map producing a reference layer in our preferred map application and enable a bridge between location names mentioned in the text and modern names of these locations

This requirement is already within reach. See #3 Annotating maps for a demonstration of how to use Maphub to associate a historic map (in this case, a map of Seoul, Korea) with spatial locations in the modern world, thus enabling a bridge between location names mentioned in our text and their modern equivalents.

- Ability to annotate a particular location on a map, defined by a set of geographic coordinates

- Ability to navigate these annotations in the real world, ideally using a mobile application

It is relatively simple to export your data from Maphub as a KML file and then to import this file into Google Earth. This gets you part of the way there, as you are now able to navigate a map and add placemarks while viewing your georectified map as an image layer. Unfortunately, this method is less successful when using the mobile version of Google Earth. We might instead use Google’s My Maps tool, which allows users to import KML/KMZ files, but the particular style of KML generated by Maphub (containing a link to an image hosted by Maphub and not much else) is not supported. As mobility is required for our use case, a workaround is necessary:

- Export Maphub KML to Google Earth

- Create a folder

- Create placemarks and add to folder

- Select folder and export coordinates to KMZ

- Open Google My Maps, create new map, and import KMZ

In this process you lose your historic image as an overlay, but it has still hopefully proven useful in identifying key locations from the text before our users begin their fieldwork.
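Before importing into My Maps, it can be useful to confirm which placemarks actually made it into the exported file. The following is a minimal sketch, assuming the export has been saved (or unzipped from the KMZ) as plain KML in the standard KML 2.2 namespace and that each placemark is a simple point. The function name `read_placemarks` and the sample placemark are illustrative, not part of any tool mentioned here.

```python
import xml.etree.ElementTree as ET

# Standard KML 2.2 namespace; an export using a different namespace would need this changed.
KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}

# Illustrative sample only; a real export would be read from a file.
SAMPLE_KML = """<?xml version="1.0" encoding="UTF-8"?>
<kml xmlns="http://www.opengis.net/kml/2.2">
  <Document>
    <Folder>
      <Placemark>
        <name>Gyeongbokgung</name>
        <Point><coordinates>126.9770,37.5796,0</coordinates></Point>
      </Placemark>
    </Folder>
  </Document>
</kml>"""


def read_placemarks(kml_text):
    """Return a list of (name, latitude, longitude) for each point placemark."""
    root = ET.fromstring(kml_text)
    placemarks = []
    for pm in root.iterfind(".//kml:Placemark", KML_NS):
        name = pm.findtext("kml:name", default="", namespaces=KML_NS)
        coords = pm.findtext(
            "kml:Point/kml:coordinates", default="", namespaces=KML_NS
        )
        if not coords.strip():
            continue  # skip placemarks that are not simple points
        # KML stores coordinates as longitude,latitude[,altitude]
        lon, lat = coords.strip().split(",")[:2]
        placemarks.append((name.strip(), float(lat), float(lon)))
    return placemarks


if __name__ == "__main__":
    for name, lat, lon in read_placemarks(SAMPLE_KML):
        print(name, lat, lon)
```

Note that KML lists longitude before latitude, which is an easy detail to get backwards when moving coordinates between tools.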

- Ability to contribute annotations to the map (e.g. photos, video, or text)

- Ability to annotate with different mediums (text, images, video)

Let’s postpone our annotation work while in the field, and instead collect our media using native applications for capturing text, photos, and video. Assuming the application we select has geotagging capabilities, the media we produce can, at a later point, be aligned with our map and/or our source text as annotations. Some additional work may be needed, however, to pull clusters of nearby geotagged items into a single annotation.
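One possible way to group nearby geotagged items is a simple distance threshold. The sketch below is an assumption on my part rather than a method used by any of the tools discussed: it computes great-circle distance with the haversine formula and greedily assigns each item to the first cluster whose first member lies within `radius_m` metres. The item fields (`id`, `lat`, `lon`) and the 50 m default are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def cluster_media(items, radius_m=50.0):
    """Greedily group items that lie within radius_m of a cluster's first member."""
    clusters = []
    for item in items:
        for cluster in clusters:
            seed = cluster[0]
            distance = haversine_m(seed["lat"], seed["lon"], item["lat"], item["lon"])
            if distance <= radius_m:
                cluster.append(item)
                break
        else:
            # no existing cluster was close enough, so start a new one
            clusters.append([item])
    return clusters


if __name__ == "__main__":
    # Hypothetical media items with made-up coordinates.
    media = [
        {"id": "photo-1", "lat": 37.5796, "lon": 126.9770},
        {"id": "note-1", "lat": 37.5797, "lon": 126.9771},
        {"id": "video-1", "lat": 37.5700, "lon": 126.9830},
    ]
    for group in cluster_media(media):
        print([m["id"] for m in group])
```

A greedy pass like this depends on the order of the items and on the chosen radius, so the resulting groups would still deserve a manual review before being turned into annotations.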

- Ability to amend or add to annotations on the map

Adding annotations while in the field may occur organically as users create media in locations that were not previously identified in the earlier steps. Later work will be required to link these new map annotations with the source text. Amending annotations is more difficult as placemarks in Google My Maps are not editable using the mobile application.

- Ability to annotate across mediums (maps and text)

In #4 Annotating text and beyond we discussed how we might model annotations which target two resources, a map and a text. In practice, this is rather difficult. Each Hypothesis annotation can be accessed via a URL. Similarly, every placemark on a Google Map can be accessed via a URL. In turn, the media we produce can be published locally, or to the public web, and also accessed via URLs. We can then associate these URLs in such a way that a user can navigate from either a map or the source text and locate the relevant annotations (text or media). Using only the tools described above, this might be achieved simply by embedding all associated URLs within each placemark, each text annotation, and each piece of media, but such a process would be tedious and the results would be difficult to navigate. No clear solution emerges without custom development.
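To give a sense of what that custom development might involve at its simplest, here is a sketch of a lookup table that records which URLs belong together, so that starting from any one of them (a placemark, a text annotation, or a media file) the others can be found. The function name and all of the `example.org` URLs are placeholders, not real Hypothesis or Google Maps addresses.

```python
from collections import defaultdict


def build_link_index(groups):
    """Map every URL to the set of other URLs recorded in the same group."""
    index = defaultdict(set)
    for group in groups:
        for url in group:
            index[url].update(other for other in group if other != url)
    return index


if __name__ == "__main__":
    # Each group lists the resources that describe one location (placeholder URLs).
    groups = [
        [
            "https://example.org/text-annotation/1",
            "https://example.org/placemark/1",
            "https://example.org/media/photo-1.jpg",
        ],
    ]
    index = build_link_index(groups)
    for linked in sorted(index["https://example.org/placemark/1"]):
        print(linked)
```

Keeping the associations in one table, rather than pasted into every placemark and annotation, would at least make them easier to maintain, though it does not by itself solve the navigation problem.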

- Optionally, serialize our resulting data using the Open Annotation standard

Again, custom code would be required. A developer should be able to write an ETL script that would enable users to serialize the data resulting from the fieldwork (stored in the various applications in the toolset described above) using the Open Annotation standard format. But then, what could you do with this data? If there are no tools to view the data, then the value of this serialization is rather limited.
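As a rough illustration of the final step of such an ETL script, the sketch below builds one annotation with a media body and two targets (a text annotation and a placemark) and prints it as JSON-LD. The property names (`hasBody`, `hasTarget`, `motivatedBy`) and the context URL follow my reading of the 2013 Open Annotation community draft and should be checked against the specification; the function name and the `example.org` URLs are hypothetical.

```python
import json

# JSON-LD context from the Open Annotation community draft (assumed; verify before use).
OA_CONTEXT = "http://www.w3.org/ns/oa-context-20130208.json"


def to_open_annotation(annotation_id, body_url, target_urls, motivation="oa:linking"):
    """Build a dict representing one annotation with a single body and several targets."""
    return {
        "@context": OA_CONTEXT,
        "@id": annotation_id,
        "@type": "oa:Annotation",
        "motivatedBy": motivation,
        "hasBody": body_url,
        "hasTarget": list(target_urls),
    }


if __name__ == "__main__":
    annotation = to_open_annotation(
        "https://example.org/annotations/1",
        "https://example.org/media/photo-1.jpg",
        [
            "https://example.org/text-annotation/1",
            "https://example.org/placemark/1",
        ],
    )
    print(json.dumps(annotation, indent=2))
```

The extract and transform steps, which would pull records out of each application in the toolset, are deliberately left out here because they depend on export formats not covered in this post.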

In summary, a proposed workflow for annotating and navigating literary maps follows. Naturally, it is imperfect in many regards, but perhaps even this limited workflow would grant us valuable insight into how the use case might behave in the real world, with real people, hopefully resulting in a better set of software and architecture requirements.

- An administrator generates pre-coordinated annotations to establish primary links between text and map, perhaps using a historic map to make her work easier

- Users take this pre-coordinated map into the field and travel to the established locations using a mobile optimized mapping application such as Google Maps

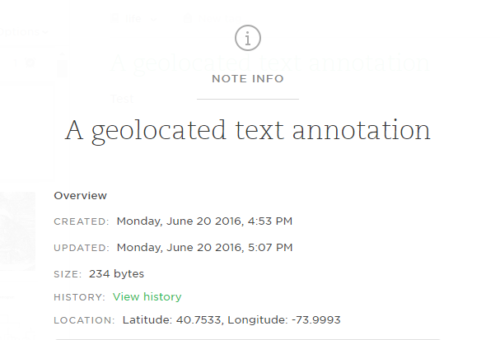

- Users collect media using native applications for capturing text, photos, and video. For example, Evernote can be used to generate a geo-located text note. Similarly, most photos and videos captured by a mobile device will have geolocation information embedded in their metadata

- When users return from the field the administrator collects all of the geolocated media and assembles the annotation data according to the Open Annotation Data Model.

Annotation, #mLearning, & Geocaching Part #6: A path forward for annotating literary maps by @rexsavior https://t.co/X7sN6utesj
